Revolutionizing Data Preparation with LLMs: Automating ETL Processes for Faster Insights



How LLMs Automate Data Preparation

- Data Cleaning

Removing duplicate records, filling in missing values, and fixing inconsistencies in a dataset.

Example: Standardising formats and correcting typographical errors to clean unstructured text data, such as customer feedback.
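A minimal sketch of this cleaning step in Python, assuming a hypothetical `llm` callable (any function that takes a prompt string and returns the model's text) standing in for whatever model API is actually used. Deduplication, whitespace normalisation, and missing-value handling are done deterministically, and only the typo correction is delegated to the model. The field names (`customer_id`, `feedback`, `rating`) and the `"unknown"` placeholder are illustrative assumptions, and `fake_llm` is a test stub, not a real model.

```python
import re
from typing import Callable

# Hypothetical model interface: takes a prompt, returns the model's text reply.
LLM = Callable[[str], str]


def normalise(text: str) -> str:
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def clean_feedback(records: list[dict], llm: LLM) -> list[dict]:
    """Drop empty/duplicate feedback, mark missing ratings, let the LLM fix typos."""
    seen: set = set()
    cleaned = []
    for rec in records:
        text = normalise(rec.get("feedback") or "")
        key = (rec.get("customer_id"), text.lower())
        if not text or key in seen:
            continue  # skip empty and duplicate records
        seen.add(key)
        prompt = (
            "Correct spelling and typographical errors in the customer feedback "
            "below without changing its meaning. Return only the corrected text.\n\n"
            + text
        )
        rating = rec.get("rating")
        cleaned.append({
            "customer_id": rec.get("customer_id"),
            "feedback": normalise(llm(prompt)),
            # Mark missing values explicitly instead of inventing a rating.
            "rating": rating if rating is not None else "unknown",
        })
    return cleaned


def fake_llm(prompt: str) -> str:
    """Test stub (not a real model): fixes one known typo in the feedback line."""
    return prompt.splitlines()[-1].replace("recieved", "received")
```

For example, `clean_feedback([{"customer_id": 1, "feedback": "Parcel  recieved late", "rating": None}], fake_llm)` returns one record whose feedback is `"Parcel received late"` and whose rating is `"unknown"`. Keeping the cheap, deterministic checks outside the model reduces the number of LLM calls and makes the behaviour easier to audit.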

- Data Transformation

Using natural language processing (NLP) to transform unstructured data into structured formats.

Example: Extracting key information from invoices, such as dates, amounts, and vendor names, and structuring it in tables.
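The invoice example can be sketched as an LLM call that returns JSON, followed by strict validation before anything reaches a table. This again assumes a hypothetical `llm` callable; the prompt wording, the `InvoiceRow` fields, and the expectation that the model replies with bare JSON are illustrative assumptions, and real model output may need more defensive parsing.

```python
import json
from dataclasses import dataclass
from datetime import date
from decimal import Decimal, InvalidOperation
from typing import Callable

# Hypothetical model interface: takes a prompt, returns the model's text reply.
LLM = Callable[[str], str]

PROMPT = (
    "Extract the invoice date (YYYY-MM-DD), total amount, and vendor name from "
    'the invoice below. Reply with JSON only, using the keys "invoice_date", '
    '"amount" and "vendor".\n\n'
)


@dataclass(frozen=True)
class InvoiceRow:
    invoice_date: date
    amount: Decimal
    vendor: str


def extract_invoice(text: str, llm: LLM) -> InvoiceRow:
    """Ask the LLM for structured fields, then validate them before loading."""
    raw = json.loads(llm(PROMPT + text))  # JSONDecodeError is a ValueError
    if not isinstance(raw, dict):
        raise ValueError("expected a JSON object")
    try:
        amount = Decimal(str(raw["amount"]))
        # date.fromisoformat raises ValueError on malformed dates.
        invoice_date = date.fromisoformat(str(raw["invoice_date"]))
    except (KeyError, InvalidOperation) as exc:
        raise ValueError(f"invalid invoice fields: {exc!r}") from exc
    if not amount.is_finite():
        raise ValueError("amount must be a finite number")
    vendor = str(raw.get("vendor") or "").strip()
    if not vendor:
        raise ValueError("missing vendor")
    return InvoiceRow(invoice_date, amount, vendor)
```

Using `Decimal` rather than `float` for the amount avoids binary rounding errors in currency values, and raising `ValueError` on malformed output keeps unvalidated model output out of the table.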

- Entity Recognition and Classification

Identifying entities in text, such as names, dates, places, or health conditions, and classifying them by type.

Example: Extracting diagnosis codes and patient details from medical records.
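A sketch of LLM-based entity extraction with two cheap safeguards: labels must come from a fixed set, and each extracted span must appear verbatim in the source note, which filters out some hallucinated values. The `llm` callable, the label set, and the JSON response format are assumptions for illustration, not any particular vendor's API.

```python
import json
from typing import Callable

LLM = Callable[[str], str]  # hypothetical model interface

# Illustrative label set; a real project would define its own.
ALLOWED_LABELS = {"PERSON", "DATE", "LOCATION", "CONDITION", "DIAGNOSIS_CODE"}


def extract_entities(note: str, llm: LLM) -> list[tuple[str, str]]:
    """Return (text, label) pairs found by the LLM, keeping only grounded ones."""
    prompt = (
        "List the entities in the clinical note below as a JSON array of objects "
        f"with keys 'text' and 'label'. Allowed labels: {sorted(ALLOWED_LABELS)}.\n\n"
        + note
    )
    entities = []
    for item in json.loads(llm(prompt)):
        if not isinstance(item, dict):
            continue  # ignore malformed array elements
        text, label = item.get("text", ""), item.get("label", "")
        # Reject unknown labels and any span that does not literally appear
        # in the source note (a cheap guard against hallucinated values).
        if label in ALLOWED_LABELS and text and text in note:
            entities.append((text, label))
    return entities
```

The verbatim check also discards entities the model has normalised (for example, a reformatted date), so a production pipeline might prefer fuzzy matching; that trade-off is a judgement call.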

- Automated Mapping of Schemas

LLMs can map diverse data schemas from multiple sources into a single, unified schema, greatly reducing the manual work of matching fields across sources.
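Schema mapping can be sketched as the LLM proposing a source-to-target column mapping that the code validates before applying it. The target `UNIFIED_SCHEMA`, the column names, and the JSON reply format are hypothetical, as is the `llm` callable. Discarding targets outside the schema and rejecting duplicate targets catches common mistakes, although a person may still want to review a proposed mapping before it runs on production data.

```python
import json
from typing import Callable

LLM = Callable[[str], str]  # hypothetical model interface

# Illustrative unified target schema (hypothetical column names).
UNIFIED_SCHEMA = ["supplier_id", "shipment_date", "quantity"]


def propose_mapping(source_columns: list[str], llm: LLM) -> dict[str, str]:
    """Ask the LLM to map source columns onto UNIFIED_SCHEMA, then validate it."""
    prompt = (
        f"Map each source column in {source_columns} to one of {UNIFIED_SCHEMA}, "
        "or to null if none fits. Reply with a JSON object only."
    )
    proposed = json.loads(llm(prompt))
    # Keep only pairs whose source exists and whose target is in the schema.
    mapping = {src: dst for src, dst in proposed.items()
               if src in source_columns and dst in UNIFIED_SCHEMA}
    if len(set(mapping.values())) != len(mapping):
        raise ValueError(f"several source columns map to one target: {mapping}")
    return mapping


def apply_mapping(rows: list[dict], mapping: dict[str, str]) -> list[dict]:
    """Rename columns to the unified schema; unmapped targets become None."""
    source_for = {dst: src for src, dst in mapping.items()}
    return [
        {col: row.get(source_for[col]) if col in source_for else None
         for col in UNIFIED_SCHEMA}
        for row in rows
    ]
```

Separating `propose_mapping` from `apply_mapping` means the mapping can be inspected, stored, and reused for every batch from the same source instead of calling the model each time.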

- Contextual Understanding

LLMs can use surrounding context to interpret ambiguous data, for example deciding whether "Apple" in a record refers to the company or the fruit, which increases the accuracy of data preparation tasks.

- Natural Language Queries

LLMs let users query data or generate transformation scripts simply by stating their requirements in plain language.
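A minimal natural-language-to-SQL sketch, assuming a hypothetical `llm` callable and an SQLite database accessed through Python's standard `sqlite3` module. The table definitions are read from `sqlite_master` and included in the prompt, and the generated SQL is executed only if it is a single statement starting with `SELECT`. This string check is deliberately conservative (it also rejects `WITH` queries) and is not a complete security boundary; a read-only database connection or user is a stronger safeguard.

```python
import re
import sqlite3
from typing import Callable

LLM = Callable[[str], str]  # hypothetical model interface

_SELECT_ONLY = re.compile(r"^\s*select\b", re.IGNORECASE)


def nl_query(question: str, conn: sqlite3.Connection, llm: LLM) -> list[tuple]:
    """Turn a plain-language question into SQL with an LLM and run it if safe."""
    # Give the model the CREATE TABLE statements so it knows the columns.
    schema = "\n".join(
        sql for (sql,) in conn.execute(
            "SELECT sql FROM sqlite_master WHERE type = 'table'")
        if sql
    )
    prompt = (
        f"SQLite schema:\n{schema}\n\n"
        f"Write one SELECT statement that answers: {question}\n"
        "Reply with SQL only."
    )
    sql = llm(prompt).strip().rstrip(";").strip()
    # Conservative guard: a single statement that starts with SELECT.
    if not _SELECT_ONLY.match(sql) or ";" in sql:
        raise ValueError(f"refusing to run generated SQL: {sql!r}")
    return conn.execute(sql).fetchall()
```

With a `sales(region, amount)` table, a question such as "Total sales by region?" and a model reply like `SELECT region, SUM(amount) FROM sales GROUP BY region` would return one row per region, while a reply such as `DROP TABLE sales` is refused.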

Benefits of Using LLMs for Data Preparation

- Reduced Manual Intervention

Automating repetitive tasks like data cleaning and transformation saves significant time and reduces the potential for human error.

- Scalability

LLMs can process large datasets from a variety of sources, such as text, images (with multimodal models), and semi-structured files.

- Quick Access to Insights

The time between data ingestion and useful insights is significantly reduced by automating ETL processes.

- Cost-Effectiveness

Relying less on specialised labour for data preparation reduces operational costs. Combining LLM-driven automation with offshore data services can streamline workflows further at lower cost.

- Flexibility

LLMs can be fine-tuned or adapted to the terminology and requirements of a specific industry, improving accuracy and performance.

Real-World Examples

JPMorgan Chase

JPMorgan Chase uses LLMs to process enormous volumes of financial documents for fraud detection, risk assessment, and compliance.

Challenge: Extracting specific data from financial records, transaction logs, and legal documents was a difficult task for the bank and prone to human error.

Solution: LLMs were used to scan documents and extract actionable details such as interest rates, payment terms, and compliance issues. These findings were then prepared for reporting and analysis.

Outcome: By drastically cutting the time needed for document analysis, JPMorgan Chase was better able to manage risk and comply with legal requirements.

Zurich Insurance

Challenge: Processing hundreds of insurance claims from handwritten notes and scanned documents required a great deal of manual work.

Solution: Zurich Insurance used LLMs to extract information such as dates, customer details, and claim amounts from unstructured claim forms. The extracted data was then automatically cleaned and organised for analysis.

Outcome: By cutting processing time by 80%, the automation accelerated claim approvals and raised customer satisfaction.

Unilever

Challenge: Unilever’s supply chain depended on data from multiple sources, often in inconsistent formats, including supplier records, invoices, and logistics reports.

Solution: An LLM-driven system extracted important information such as supplier IDs, shipment dates, and quantities from these documents and standardised it into a central database.

Outcome: By gaining real-time supply chain visibility, Unilever was able to make proactive decisions and cut inventory costs.

Walmart

Challenge: To optimise product placement and pricing strategies, Walmart has to analyse enormous volumes of transaction data and customer feedback.

Solution: LLMs were implemented to process reviews, extract keywords, and classify consumer sentiments. Transaction logs were also compiled and cleaned to analyse trends.

Outcome: These insights helped Walmart identify underperforming products, adjust pricing dynamically, and improve customer satisfaction.

Mayo Clinic

By incorporating LLMs into its data pipeline, the Mayo Clinic expedited the extraction of clinical insights from unstructured data such as medical records and physician notes.

Challenge: The Mayo Clinic needed to analyse millions of medical records to improve treatment techniques. However, 80% of its data was in unstructured text format, which made traditional ETL operations time-consuming and error-prone.

Solution: Using GPT-based LLMs, the clinic automated the extraction of important information such as patient demographics, diagnosis codes, treatment plans, and outcomes. The LLMs converted raw text into structured data compatible with the clinic's analytics systems.

Outcome: Automation reduced data preparation time by 70%, allowing researchers to analyse trends and improve treatment procedures and patient outcomes.

LLMs in ETL Pipelines

Every step of the ETL pipeline can benefit greatly from the use of LLMs:

- Extract

Parsing scanned documents, photos, and unstructured text.

Example: Retrieving client information from PDF insurance claim forms.

- Transform

Transforming unstructured data into a format suitable for analysis.

Example: Cleaning and classifying user reviews from e-commerce sites.

- Load

Mapping cleansed data to the target schema and loading it into databases or visualisation tools.

Example: Loading organised medical data into a business intelligence dashboard.
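The three stages can be sketched as separate functions: `extract` asks a hypothetical `llm` callable for JSON fields from each claim document, `transform` normalises types and sets aside records that fail validation, and `load` writes the clean rows to SQLite. The table name, field names, and prompt are illustrative assumptions; a real pipeline would load into its own warehouse, but the structure stays the same.

```python
import json
import sqlite3
from typing import Callable, Iterable

LLM = Callable[[str], str]  # hypothetical model interface

EXTRACT_PROMPT = (
    "Return JSON with the keys 'claim_id', 'customer' and 'amount' for the "
    "insurance claim below. Reply with JSON only.\n\n"
)


def extract(documents: Iterable[str], llm: LLM) -> list[dict]:
    """Extract: ask the LLM for the key fields of each claim document."""
    return [json.loads(llm(EXTRACT_PROMPT + doc)) for doc in documents]


def transform(records: list[dict]) -> tuple[list[tuple], list[dict]]:
    """Transform: normalise types; set aside records that fail validation."""
    rows, rejects = [], []
    for rec in records:
        try:
            customer = rec["customer"].strip().title()  # AttributeError if not str
            amount = round(float(rec["amount"]), 2)      # TypeError/ValueError if bad
            if not customer:
                raise ValueError("empty customer name")
            rows.append((str(rec["claim_id"]), customer, amount))
        except (KeyError, AttributeError, TypeError, ValueError):
            rejects.append(rec)  # keep for human review instead of dropping
    return rows, rejects


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: upsert the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS claims "
        "(claim_id TEXT PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO claims VALUES (?, ?, ?)", rows)
    conn.commit()
```

Routing rejects to a separate list rather than dropping them silently leaves a trail for human review, which matters when model output feeds reporting and analysis.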

Tools and Technologies

Several platforms and providers offer LLM capabilities for automating data preparation:

- Databricks

Within its Lakehouse platform, Databricks provides LLM-based solutions for organising and cleaning large amounts of data.

- Azure Synapse by Microsoft

Offers AI-powered tools, including LLM integrations, for automating ETL procedures.

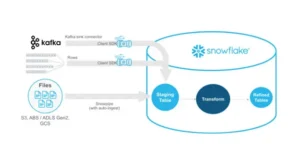

- Snowflake

Simplifies data transformation and schema matching operations with AI and LLMs.

- Hugging Face and OpenAI

Offer APIs for NLP-based data preparation that can be included in custom ETL processes.

Conclusion

Businesses that adopt LLM-driven automation will gain a competitive advantage, maximising the value of their data while saving time and money. Partnering with offshore data service providers can accelerate implementation and provide access to skilled teams familiar with LLM integration.

If you’re ready to embark on this journey and need expert guidance, subscribe to our newsletter for more tips and insights, or contact us at Offsoar to learn how we can help you build a scalable data analytics pipeline that drives business success. Let’s work together to turn data into actionable insights and create a brighter future for your organization.
