Harnessing LLMs for Real-Time Data Analysis and Smarter Decision-Making in Business

Harnessing the Power of LLMs for Real-Time Data Analysis and Decision-Making Across Industries

How LLMs Handle Real-Time Data

Interpret Live Data Streams: LLMs can analyse structured and unstructured data from real-time feeds and APIs as it arrives.

Generate Insights: LLMs can help organisations make sense of complex data by identifying trends, correlations, and patterns.

Support Decision-Making: Natural language outputs from LLMs help decision-makers act on data-driven insights.
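The three steps above can be sketched as a small function that renders a batch of live events into a prompt and passes it to a model. This is a minimal illustration, not a production design: the `Event` schema, the prompt wording, and the `call_llm` parameter are assumptions made for the example, and `fake_llm` is a stand-in so the code runs without any external service.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Event:
    """One record from a live feed (hypothetical schema)."""
    source: str  # e.g. "sales_api" or "social"
    text: str    # structured values rendered as text, or free text


def build_prompt(events: Iterable[Event], question: str) -> str:
    """Render a batch of events plus a question as a single prompt."""
    lines = [f"- [{e.source}] {e.text}" for e in events]
    return (
        "You are assisting a business analyst.\n"
        "Recent events:\n" + "\n".join(lines) + "\n"
        f"Question: {question}\n"
        "Answer in two sentences and state your confidence."
    )


def analyse(events, question, call_llm: Callable[[str], str]) -> str:
    """Send the prompt to whatever LLM client the caller supplies."""
    return call_llm(build_prompt(events, question))


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model so the sketch runs offline.
    return f"(model reply to {prompt.count('- [')} events)"


batch = [
    Event("sales_api", "sku=A12 units_sold=40 window=5min"),
    Event("social", "Loving the new A12, but shipping is slow"),
]
summary = analyse(batch, "Is demand for A12 rising?", fake_llm)
```

Passing the model client in as a function keeps the prompt-building logic testable and independent of any particular LLM provider.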

Applications Across Industries

By analysing large amounts of unstructured data, including social media posts, customer reviews, and comments, LLMs help companies gain insights that inform decisions, sharpen marketing tactics, and improve the overall customer experience. The well-known businesses below have incorporated LLMs into their operations.

1. Finance: Bloomberg

Timeliness and precision are everything in the finance world. Rapid data processing is required so that analysts, traders, managers, and other professionals can make well-informed decisions.

Evaluation of Risk: By looking at market data, breaking news, and geopolitical events, an LLM can flag stocks that are susceptible to volatility.

Predictions: Drawing on past patterns in trading data, LLMs can help forecast price movements and market trends.

Case Study: Bloomberg, one of the biggest providers of financial data, introduced BloombergGPT, a finance-focused LLM. It processes news, market data, and financial documents, helping professionals make quicker and better judgements. Unlike most general-purpose GPT models, BloombergGPT is built specifically for financial data and is aimed at tasks such as trend prediction and sentiment analysis.

2. Retail: Amazon

Use Case: Inventory control and dynamic pricing. Retailers can use LLMs to capture real-time sales data, competitor pricing, and customer reactions on social media. This enables:

Dynamic Pricing: Adjusting product prices based on market demand and competitor actions.

Optimized Inventory: Knowing in advance which products will sell out so you can quickly restock them.
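As a rough illustration of the two ideas above, the sketch below adjusts a price from a demand signal and a competitor price, and estimates time to sell-out. It is a simple rule-based example under stated assumptions, not a description of how Amazon or any other retailer prices products: the function names, the 15% cap, the 0.5 sensitivity factor, and the 5% competitor margin are all hypothetical.

```python
def dynamic_price(base_price, demand_ratio, competitor_price,
                  max_change=0.15):
    """Nudge the price with demand, bounded and kept near the competitor.

    demand_ratio: recent units sold / expected units sold (1.0 = on plan).
    """
    # Scale price with demand, but never move more than max_change.
    adjustment = max(-max_change, min(max_change, (demand_ratio - 1.0) * 0.5))
    candidate = base_price * (1.0 + adjustment)
    # Assumed policy: do not exceed the competitor's price by more than 5%.
    ceiling = competitor_price * 1.05
    return round(min(candidate, ceiling), 2)


def hours_until_stockout(units_in_stock, units_sold_per_hour):
    """Estimate time to sell-out at the current sales rate."""
    if units_sold_per_hour <= 0:
        return float("inf")  # nothing is selling, so no stockout is expected
    return units_in_stock / units_sold_per_hour


price = dynamic_price(100.0, demand_ratio=1.2, competitor_price=120.0)
restock_in = hours_until_stockout(units_in_stock=30, units_sold_per_hour=12)
```

In a setup like the one the article describes, an LLM could supply or explain the inputs (for example, summarising social media reaction into a demand signal), while bounded rules like these keep the final price within limits the business has approved.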

3. Healthcare: Mayo Clinic

Identify Anomalies: Inform medical professionals about unusual patterns, such as irregular heartbeats or early infection symptoms.

Provide Treatment Recommendations: Suggest evidence-based treatment options informed by patient data.
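Anomaly flagging of the kind described above is often done with a statistical check before any language model is involved. The sketch below uses a rolling z-score over a stream of heart-rate readings; the window size, threshold, and sample values are illustrative assumptions, not clinical guidance or Mayo Clinic practice.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Yield (index, value) for readings far from the recent rolling mean."""
    recent = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            # Guard against a flat window, where sigma is zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield i, value
        recent.append(value)


heart_rate = [72, 74, 73, 71, 72, 73, 140, 72, 74]  # made-up sample data
alerts = list(detect_anomalies(heart_rate))
```

Here only the reading of 140 at index 6 is flagged. Note that the outlier then enters the rolling window and inflates the deviation for the next few readings; a more careful version might exclude flagged values from the baseline.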

Technical Foundations for Real-Time LLM Applications

- Data Pipelines

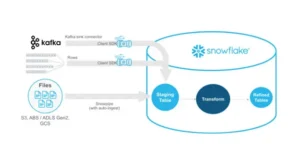

Real-time data is collected via APIs, Internet of Things (IoT) devices, or streaming platforms such as Apache Kafka or Amazon Kinesis, which provide an uninterrupted flow of data to the LLM.
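One common pattern at this stage is to group incoming records into small batches before sending them to the model. The sketch below shows the idea with a simulated feed; `simulated_feed` and its record fields are hypothetical stand-ins for a real Kafka or Kinesis consumer loop, whose client APIs are not shown here.

```python
from typing import Iterable, Iterator, List


def micro_batches(stream: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Group a continuous stream of records into fixed-size batches."""
    batch: List[dict] = []
    for record in stream:
        batch.append(record)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # flush the final partial batch
        yield batch


def simulated_feed(n: int) -> Iterator[dict]:
    """Stand-in for a streaming consumer (hypothetical data)."""
    for i in range(n):
        yield {"sensor_id": "s1", "seq": i, "value": 20.0 + i}


batches = list(micro_batches(simulated_feed(7), size=3))
```

Seven records with a batch size of three produce batches of three, three, and one. A real deployment would usually also flush on a timer so that a slow stream does not delay the last few records.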

- Optimisation of the Model

LLMs are fine-tuned on domain-specific datasets to ensure relevance. This improves the model's accuracy and effectiveness for specific use cases.
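Preparing such a dataset usually starts with turning domain examples into a consistent file format. The sketch below writes prompt and completion pairs as JSON Lines; the `prompt` and `completion` field names and the sample sentences are assumptions for illustration, since each fine-tuning service or library defines its own expected schema.

```python
import json


def to_training_lines(examples):
    """Serialise (prompt, completion) pairs as JSON Lines text."""
    lines = []
    for prompt, completion in examples:
        prompt, completion = prompt.strip(), completion.strip()
        if not prompt or not completion:
            continue  # skip incomplete pairs rather than train on them
        lines.append(json.dumps({"prompt": prompt, "completion": completion}))
    return "\n".join(lines)


finance_examples = [
    ("Classify sentiment: 'Shares fell after the earnings miss.'", "negative"),
    ("Classify sentiment: 'Guidance was raised for Q3.'", "positive"),
    ("   ", "neutral"),  # dropped: empty prompt
]
jsonl = to_training_lines(finance_examples)
```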

- Minimal Latency

To keep latency low when processing real-time data, LLMs need optimised hardware, such as GPUs or TPUs, and efficient deployment on edge devices or in hybrid cloud settings.
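Before tuning hardware or deployment, it helps to measure latency in a repeatable way. The sketch below times each call and reports the median and 95th percentile; `fake_inference` is a placeholder for a real model call, and the 1 ms sleep is an arbitrary assumption used only to simulate work.

```python
import time
from statistics import quantiles


def measure_latency(fn, inputs):
    """Return per-call latency in milliseconds for fn over inputs."""
    timings = []
    for item in inputs:
        start = time.perf_counter()
        fn(item)
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings


def latency_report(timings_ms):
    """Summarise latencies as median and 95th percentile."""
    cuts = quantiles(timings_ms, n=100)  # 99 cut points
    return {"p50_ms": cuts[49], "p95_ms": cuts[94]}


def fake_inference(text):
    # Placeholder for a model call; sleeps briefly to simulate work.
    time.sleep(0.001)
    return text.upper()


report = latency_report(measure_latency(fake_inference, ["tick"] * 20))
```

Tracking a tail percentile as well as the median matters for real-time use, because occasional slow responses are often what users and downstream systems notice.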

- Context Maintenance

By allowing LLMs to maintain context during a series of data interactions, frameworks such as LangChain enhance their capacity to produce insightful findings in dynamic situations.
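The underlying idea can be shown without any framework: keep the most recent messages that fit within a size budget and prepend them to each new request. The sketch below is a plain-Python illustration and does not use or reproduce LangChain's API; the `RollingContext` class and its word-count budget are assumptions for the example (real systems typically count tokens rather than words).

```python
from collections import deque


class RollingContext:
    """Keep the most recent messages that fit within a word budget."""

    def __init__(self, max_words: int):
        self.max_words = max_words
        self.messages = deque()
        self.word_count = 0

    def add(self, message: str) -> None:
        self.messages.append(message)
        self.word_count += len(message.split())
        # Evict the oldest messages until the budget is respected,
        # but always keep the newest one.
        while self.word_count > self.max_words and len(self.messages) > 1:
            oldest = self.messages.popleft()
            self.word_count -= len(oldest.split())

    def as_prompt(self, question: str) -> str:
        history = "\n".join(self.messages)
        return f"Context so far:\n{history}\n\nNew question: {question}"


ctx = RollingContext(max_words=8)
ctx.add("Sales rose 5% at 09:00")   # 5 words
ctx.add("Returns spiked at 09:15")  # 4 more words: over budget, evict oldest
ctx.add("Stock low on A12")         # 4 more words: exactly 8, both kept
prompt = ctx.as_prompt("What should we restock first?")
```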

Challenges in Real-Time LLM Deployment

Data Volume and Speed: Models and infrastructure that can manage large data volumes and velocity without bottlenecks are required for real-time analysis.

Accuracy and Bias: Training LLMs on biased or insufficient datasets can produce inaccurate predictions, which is especially dangerous in industries like healthcare and finance.

Interpretability: Non-technical stakeholders may find it difficult to comprehend LLM-generated insights because of their complexity.

Resource Intensity: Real-time processing might demand a lot of resources, requiring economical techniques like distillation or model compression.
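Distillation, mentioned above, trains a smaller "student" model to match the output distribution of a larger "teacher". A common formulation compares temperature-softened probabilities using KL divergence. The sketch below shows only that loss term in plain Python, with made-up logits; a real training loop would use a deep learning framework and typically combine this term with a standard label loss.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperature gives softer targets."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [2.0, 1.0, 0.1]  # illustrative logits, not real model output
matched = distillation_loss(teacher, [2.0, 1.0, 0.1])
mismatched = distillation_loss(teacher, [0.1, 1.0, 2.0])
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which is the signal the smaller model is trained to minimise.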

Future of LLMs in Real-Time Analysis

- Integration of Predictive Analytics

Combining LLMs with predictive algorithms will improve their ability to forecast trends in industries like manufacturing and logistics.

- Domain-Specific Models

Creating specialised LLMs for particular industries, like legal or energy, will improve accuracy and application relevance.

- Explainable AI (XAI)

Developments in XAI will enhance the interpretability of LLM-driven insights, promoting user confidence as the need for transparency increases.

- Sustainability

Efforts to optimise LLMs for energy efficiency will address concerns over their environmental impact and running costs.

Conclusion

Addressing issues like bias, accuracy, and resource intensity as technology advances will enable LLMs to reach their full potential and open the door to quicker, smarter, and better-informed decision-making in a data-driven society. To accelerate this transformation, businesses should consider hiring experienced LLM developers who can design, fine-tune, and integrate custom solutions aligned with their unique data goals.
