Explainable AI (XAI): Building Trust and Transparency in Artificial Intelligence

Explainable AI (XAI): Why Transparency in AI Models is More Important Than Ever

In a world increasingly shaped by algorithms and machine learning, the inner workings of Artificial Intelligence (AI) can feel like a riddle wrapped in an enigma. How can businesses and consumers use these powerful technologies clearly and responsibly as they navigate the digital landscape? Enter Explainable AI (XAI), which sheds light on that complexity.

Imagine a future where AI not only answers questions but also explains its decisions. XAI aims to turn AI’s “black box” into a transparent source of information so that stakeholders, from developers to everyday users, can understand it, trust it, and make full use of it. This article looks at XAI’s role in building a more responsible AI ecosystem, where trust and understanding enable innovation, and at why transparency has become a requirement in the age of artificial intelligence.

What is Explainable AI (XAI)?

Explainable artificial intelligence (XAI) is a collection of techniques that help people understand how AI algorithms reach their decisions. Many AI models, deep learning models in particular, are highly complex and operate as “black boxes,” meaning their decision-making is very hard to explain in practice. XAI addresses this barrier by making the reasoning behind AI decisions visible. Imagine, for instance, a financial institution that evaluates loan applications with AI. With a black-box model, the bank might not be able to explain why a given application was approved or denied. With XAI, it could provide a breakdown of the main factors influencing the decision, such as salary, credit score, or work experience. This helps ensure that decisions are made ethically and fairly, and it builds confidence in artificial intelligence.
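The loan example can be sketched in a few lines of Python. This is a minimal illustration that assumes a hypothetical linear scoring model: the names `WEIGHTS`, `BIAS`, `THRESHOLD`, and `explain_loan_decision`, and all the numbers, are invented for this sketch and do not describe any real lender's system. In a linear model, each feature's contribution is simply its weight times its value, which is what makes this kind of breakdown easy to produce.

```python
# Hypothetical linear credit-scoring model. The weights, bias, and threshold
# are illustrative assumptions, not values from any real lender.
WEIGHTS = {"salary_k": 0.02, "credit_score": 0.01, "years_employed": 0.1}
BIAS = -8.5
THRESHOLD = 0.0


def explain_loan_decision(applicant):
    """Return the decision, the raw score, and per-feature contributions."""
    # In a linear model, each feature contributes weight * value to the score.
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    decision = "approved" if score >= THRESHOLD else "denied"
    # Rank features by the size of their contribution, largest first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return decision, score, ranked


applicant = {"salary_k": 55, "credit_score": 640, "years_employed": 3}
decision, score, ranked = explain_loan_decision(applicant)

print(f"Decision: {decision} (score {score:.2f})")
for name, value in ranked:
    print(f"  {name}: {value:+.2f}")
```

The ranked list is the explanation: it tells the applicant and the bank which inputs weighed most heavily in the outcome. Real credit models are rarely this simple, which is where the techniques discussed later come in.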

The Growing Demand for Transparency in AI

The need for transparent artificial intelligence has been growing quickly for several reasons, starting with ethical concerns. Bias in AI models is one of the main problems, as it can lead to unequal treatment of people. For example, biased facial recognition algorithms have been found to have higher error rates when recognizing people of color, raising major ethical and societal issues. By letting users understand the logic behind AI decisions, XAI helps to detect and reduce such biases.
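One simple way to surface this kind of disparity is to compare error rates across groups. The sketch below is only an illustration: the function name `error_rate_by_group` and the toy records are hypothetical, and a real fairness audit would use far more data and additional metrics.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Compute the share of wrong predictions for each group.

    Each record is a (group, true_label, predicted_label) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        if y_true != y_pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Toy data, invented for illustration only.
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 0), ("B", 0, 1),
]
rates = error_rate_by_group(records)
for group, rate in sorted(rates.items()):
    print(f"group {group}: error rate {rate:.2f}")
```

A large gap between groups does not by itself explain why the model behaves differently, but it tells the team where to point explainability tools next.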

Regulatory pressure is another force fueling demand. Laws such as the European Union's General Data Protection Regulation (GDPR) require companies to give people meaningful information about automated decisions that significantly affect them. Likewise, the EU Artificial Intelligence Act introduces stricter transparency rules, especially in high-risk sectors like healthcare and banking. These rules are pushing companies to adopt XAI solutions in order to stay compliant and reduce legal risk.

Public trust is another important factor. Surveys indicate that 67% of people would trust artificial intelligence if they were given more explanation of how it operates. This makes transparency a commercial need as well as a legal and moral one. In sectors like banking and healthcare, where trust is essential, a lack of transparency could significantly slow the adoption of artificial intelligence.

Advantages of XAI for Stakeholders

XAI offers different advantages to different stakeholders:

- Data Scientists: XAI helps data scientists debug and improve AI models more quickly. Knowing which features or variables drive a model's output makes it easier to adjust the model for better fairness and accuracy.

- Businesses: Transparency fosters trust. Users are more inclined to trust a system when they can understand the reasons behind a model's decisions. In e-commerce, for instance, transparent product recommendations based on understandable criteria such as purchasing behavior can increase user engagement and satisfaction. Many organizations are also exploring outsourcing AI transparency management to specialized providers who can help implement explainability tools and maintain compliance.

- Regulators and Compliance Officers: XAI makes it easier for regulators and compliance officers to oversee AI systems and verify that legal requirements are met. If a company is audited, explainable AI provides clear, readily available information about the decision-making process, which simplifies compliance with regulations such as GDPR.

- End Users: End users, whether customers, patients, or employees, are more likely to accept AI-driven decisions when they understand how those decisions are made. This understanding helps reduce mistrust and anxiety around artificial intelligence.

XAI Techniques and Approaches

Several approaches exist for making artificial intelligence models explainable:

- Model-Agnostic Techniques: Model-agnostic techniques such as LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) can be applied to any AI model to explain why a particular decision was made. LIME, for instance, works by slightly perturbing the input data, observing how the model's predictions change, and fitting a simple local model to those observations. SHAP, by contrast, assigns each feature a contribution score for a given prediction.

- Glass-Box Models: Glass-box models are inherently interpretable. Rule-based systems, linear regression, and decision trees are a few examples. Although these models are often less powerful than deep learning models on complex tasks, their simplicity makes them well suited to applications where transparency is vital.

- Hybrid Models: Some companies balance performance and transparency by combining complex black-box models with explainable layers. A deep learning model, for example, can be paired with a simpler model that provides the justification for high-stakes decisions such as loan approvals or medical diagnoses.
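To make the model-agnostic idea concrete, here is a small sketch in plain Python. It is not the LIME or SHAP library. It shows two things under stated assumptions: a one-feature-at-a-time perturbation probe, which is a much simplified stand-in for LIME's approach of perturbing inputs and watching predictions change, and an exact Shapley value computation, which is the quantity SHAP approximates efficiently. The `black_box` function, the instance, and the baseline are all hypothetical.

```python
from itertools import permutations


def black_box(x):
    """Hypothetical opaque model with an interaction between two features."""
    salary_k, credit_score, years_employed = x
    return (0.02 * salary_k + 0.01 * credit_score + 0.1 * years_employed
            + 0.001 * salary_k * years_employed)


def perturbation_sensitivity(model, instance, delta=1.0):
    """Nudge each feature by delta and record how the prediction changes."""
    base = model(instance)
    changes = []
    for i in range(len(instance)):
        perturbed = list(instance)
        perturbed[i] += delta
        changes.append(model(perturbed) - base)
    return changes


def shapley_values(model, instance, baseline):
    """Exact Shapley values by enumerating every feature ordering.

    Features not yet "switched on" take their baseline value. The cost grows
    factorially with the number of features, so this only suits tiny examples.
    """
    n = len(instance)
    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = model(current)
        for i in order:
            current[i] = instance[i]
            updated = model(current)
            phi[i] += updated - previous  # marginal contribution of feature i
            previous = updated
    return [value / len(orderings) for value in phi]


instance = (55, 640, 3)   # the applicant being explained (illustrative)
baseline = (50, 700, 5)   # a reference applicant (illustrative)

sensitivity = perturbation_sensitivity(black_box, instance)
phi = shapley_values(black_box, instance, baseline)

names = ["salary_k", "credit_score", "years_employed"]
for name, s, p in zip(names, sensitivity, phi):
    print(f"{name}: sensitivity {s:+.3f}, Shapley contribution {p:+.3f}")
```

A useful property to check is that the Shapley contributions add up to the difference between the model's prediction for the instance and its prediction for the baseline. In practice, libraries rely on sampling or model-specific shortcuts because enumerating every ordering quickly becomes infeasible.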

Challenges and Limitations of XAI

XAI has numerous advantages, but it also comes with its own difficulties. One is the trade-off between complexity and interpretability: the more complicated AI models become, the harder their decision-making is to explain. On the other hand, simplifying explanations can lead to oversimplification, leaving out information needed for significant decisions. Certain sectors also face particular difficulty implementing XAI. In healthcare, for instance, both accuracy and interpretability are vital, and a medical diagnosis accompanied by an overly simplistic explanation risks misunderstanding and poor outcomes. Finally, XAI is still an area of active development. Although LIME and SHAP are gaining popularity, they are not flawless, and researchers continue to refine these techniques so that the explanations they produce are accurate and meaningful.
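The oversimplification risk can be illustrated with a toy experiment. The sketch below assumes a hypothetical nonlinear `black_box` and fits a straight-line "explanation" to it, then measures how faithfully the line reproduces the model using R². All function names here are invented for the illustration.

```python
def black_box(x):
    """Hypothetical nonlinear model standing in for a complex AI system."""
    return x ** 2


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(ys, preds):
    """Share of the variance in ys that the predictions reproduce."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


def surrogate_fidelity(low, high, steps=21):
    """Fit a linear surrogate to the black box on [low, high]; return its R^2."""
    xs = [low + (high - low) * i / (steps - 1) for i in range(steps)]
    ys = [black_box(x) for x in xs]
    slope, intercept = fit_line(xs, ys)
    preds = [slope * x + intercept for x in xs]
    return r_squared(ys, preds)


local_fidelity = surrogate_fidelity(1.0, 1.2)    # narrow region around one input
global_fidelity = surrogate_fidelity(-3.0, 3.0)  # the whole input range

print(f"local fidelity:  {local_fidelity:.4f}")
print(f"global fidelity: {global_fidelity:.4f}")
```

In this toy setup the straight line tracks the model closely over a narrow region but captures almost none of its behavior over the full range. A simple explanation can therefore be accurate for one decision and misleading as a summary of the whole model.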

Conclusion

Explainable artificial intelligence (XAI) is now a necessity rather than a luxury. As AI systems take on more important roles in decision-making across sectors, transparency is essential to ensure that their decisions are ethical, fair, and reliable. XAI brings challenges that require careful navigation, but it also gives stakeholders the tools they need to understand, trust, and properly govern artificial intelligence. As public demand for transparency and regulatory pressure continue to rise, businesses that invest in XAI will be better positioned to meet their legal obligations and will gain a competitive edge by building closer relationships with their customers. Outsourcing AI transparency management can further help organizations accelerate adoption and ensure their explainability practices keep pace with evolving standards.

How LLMs Are Revolutionizing Text Mining and Data Extraction from Unstructured Data

How Businesses Use LLMs for Competitive Intelligence to Stay Ahead of the Curve

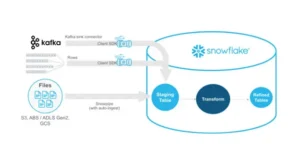

Maximizing Cost-Efficient Performance: Best Practices for Scaling Data Warehouses in Snowflake

Implementing Snowflake Data Governance for Scalable Data Security